Some notes on entropy, cross-entropy, KL divergence, and mutual information. Here is the zhihu link.

Entropy:

$$

H(P) = -\sum_x P(x)\log P(x)

$$
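
As a quick sanity check of the definition, here is a minimal sketch that evaluates the entropy of two coin distributions. The function name `entropy` and the example distributions are illustrative assumptions, and the logarithm is taken in base 2 (so the result is in bits); the formula above does not fix a base.

```python
import math


def entropy(p):
    """Shannon entropy H(P) = -sum_x P(x) log2 P(x), in bits.

    Terms with P(x) = 0 are skipped, following the convention 0 * log 0 = 0.
    """
    return -sum(px * math.log2(px) for px in p if px > 0)


# Hypothetical example distributions over two outcomes.
fair_coin = [0.5, 0.5]
biased_coin = [0.9, 0.1]

h_fair = entropy(fair_coin)      # maximal uncertainty for two outcomes
h_biased = entropy(biased_coin)  # lower: the outcome is more predictable

print(f"fair coin:   {h_fair:.4f} bits")
print(f"biased coin: {h_biased:.4f} bits")
```

The fair coin reaches the maximum entropy for two outcomes, while the biased coin has lower entropy because its outcome is easier to predict.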

Cross-Entropy:

$$

H(P,Q) = -\sum_x P(x)\log Q(x)

$$

KL-divergence:

$$

\begin{aligned}
KL(P\|Q) &= -\sum_x P(x)\log\frac{Q(x)}{P(x)} \\
&= H(P,Q) - H(P)
\end{aligned}

$$
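
The identity KL(P||Q) = H(P,Q) - H(P) can be checked numerically. The sketch below is only an illustration: the function names and the distributions `p` and `q` are assumptions, natural logarithms are used, and `q` is assumed to be strictly positive wherever `p` is.

```python
import math


def entropy(p):
    """H(P) = -sum_x P(x) log P(x), in nats."""
    return -sum(px * math.log(px) for px in p if px > 0)


def cross_entropy(p, q):
    """H(P, Q) = -sum_x P(x) log Q(x); assumes Q(x) > 0 wherever P(x) > 0."""
    return -sum(px * math.log(qx) for px, qx in zip(p, q) if px > 0)


def kl_divergence(p, q):
    """KL(P||Q) = sum_x P(x) log(P(x) / Q(x)); same assumption on Q."""
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0)


# Hypothetical distributions over three outcomes.
p = [0.7, 0.2, 0.1]
q = [0.5, 0.3, 0.2]

kl_pq = kl_divergence(p, q)
kl_qp = kl_divergence(q, p)
gap = cross_entropy(p, q) - entropy(p)

print(f"KL(P||Q)        = {kl_pq:.6f}")
print(f"H(P,Q) - H(P)   = {gap:.6f}")
print(f"KL(Q||P)        = {kl_qp:.6f}")
```

The first two printed values agree up to floating-point error. The third differs from the first, which shows that KL divergence is not symmetric in its arguments.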

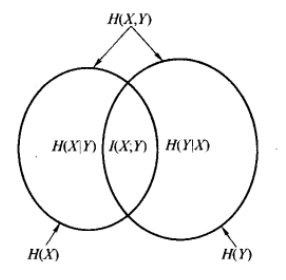

Mutual Information:

$$

\begin{aligned}
I(X;Y) &= \sum_{x}\sum_{y} p(x,y)\log\frac{p(x,y)}{p(x)p(y)} \\
&= \sum_x\sum_y p(x,y)\log\frac{p(x \mid y)}{p(x)} \\
&= \sum_x\sum_y p(x,y)\log p(x \mid y) - \sum_x p(x)\log p(x) \\
&= H(X) - H(X \mid Y)
\end{aligned}

$$
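
The derivation above can be verified on a small joint table. This is a rough sketch under stated assumptions: the joint distribution is a hypothetical 2x2 table with rows indexed by x and columns by y, the helper names are made up for illustration, and natural logarithms are used.

```python
import math


def marginals(joint):
    """Return (p(x), p(y)) from a joint table with rows = x, columns = y."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return px, py


def mutual_information(joint):
    """I(X;Y) = sum_x sum_y p(x,y) log( p(x,y) / (p(x) p(y)) )."""
    px, py = marginals(joint)
    return sum(
        pxy * math.log(pxy / (px[i] * py[j]))
        for i, row in enumerate(joint)
        for j, pxy in enumerate(row)
        if pxy > 0
    )


def entropy(p):
    """H(X) = -sum_x p(x) log p(x)."""
    return -sum(v * math.log(v) for v in p if v > 0)


def conditional_entropy_x_given_y(joint):
    """H(X|Y) = -sum_x sum_y p(x,y) log p(x|y), with p(x|y) = p(x,y) / p(y)."""
    _, py = marginals(joint)
    return -sum(
        pxy * math.log(pxy / py[j])
        for row in joint
        for j, pxy in enumerate(row)
        if pxy > 0
    )


# Hypothetical joint distributions: one correlated, one independent.
correlated = [[0.4, 0.1],
              [0.1, 0.4]]
independent = [[0.25, 0.25],
               [0.25, 0.25]]

mi = mutual_information(correlated)
px, _ = marginals(correlated)
h_diff = entropy(px) - conditional_entropy_x_given_y(correlated)
mi_indep = mutual_information(independent)

print(f"I(X;Y)          = {mi:.6f}")
print(f"H(X) - H(X|Y)   = {h_diff:.6f}")
print(f"I for independent X, Y = {mi_indep:.6f}")
```

The first two values match, and the mutual information of the independent table is zero because p(x,y) = p(x)p(y) makes every log term vanish.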

When the distribution is continuous, replace each sum with an integral and each probability mass with the corresponding probability density.
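
To illustrate the continuous case, the sketch below approximates the entropy integral of a Gaussian density with a midpoint Riemann sum and compares it with the well-known closed form 0.5 * log(2 * pi * e * sigma^2). The choice of a Gaussian, the value of `sigma`, the integration range, and the step count are all assumptions made for this example.

```python
import math


def gaussian_pdf(x, sigma):
    """Density of a zero-mean normal distribution with standard deviation sigma."""
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def differential_entropy(pdf, lo, hi, steps=20000):
    """Approximate -integral p(x) log p(x) dx on [lo, hi] with the midpoint rule."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * dx
        p = pdf(x)
        if p > 0:
            total -= p * math.log(p) * dx
    return total


sigma = 2.0  # hypothetical standard deviation

# Integrate over +/- 10 sigma; the tails beyond that are negligible.
h_numeric = differential_entropy(lambda x: gaussian_pdf(x, sigma),
                                 -10 * sigma, 10 * sigma)
h_closed = 0.5 * math.log(2.0 * math.pi * math.e * sigma ** 2)

print(f"numerical integral: {h_numeric:.6f} nats")
print(f"closed form:        {h_closed:.6f} nats")
```

The two values agree closely, which suggests the sum-to-integral substitution behaves as expected for this density.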